I wrote in a column called "Measuring Success" that unless a training group was earning increased funding and promotions, all other metrics were fairly irrelevant.

With that idea firmly in place, it is worth discussing a slightly less Machiavellian point.

Using hard "excel-able" metrics to measure increases in [fill in blank] that came as a direct result of formal learning programs is great, but always difficult, especially when dealing with Big Skills.

Many programs use other, sub-optimal options.

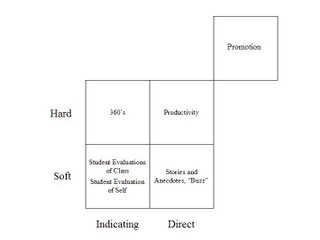

- Hard, Indicating (360's; business results)

- Soft, Direct (anecdotal)

- Soft, Indicating (students' evaluations of course, self)

We have an assumption that hard/indirect is better than soft/direct (I think), and that seems a shame.

We also have an assumption that it is better to impact everybody in a class in a little way than a few people in a class in a transformational way. That logic might be right, but we should accept it consciously, not unconsciously.
